Test Your AI Agents Against Real Security Threats: OWASP & Beyond

Comprehensive security diagnostics for AI agents with actionable insights and privacy-focused actionable evaluation reports.

Trusted by industry leaders

Enterprise-grade security

Comprehensive AI Agent Protection

Protect your AI investments with our advanced security platform

Actionable Security Insights

Get detailed reports with clear remediation steps for every vulnerability detected in your AI agents.

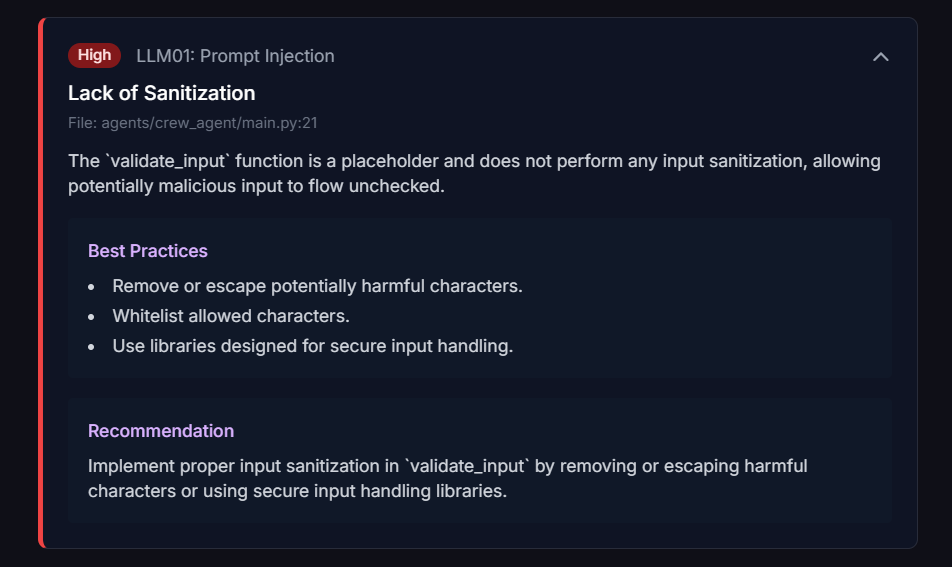

Detailed Vulnerability Reports

- Clear explanations of each vulnerability with step-by-step remediation guides.

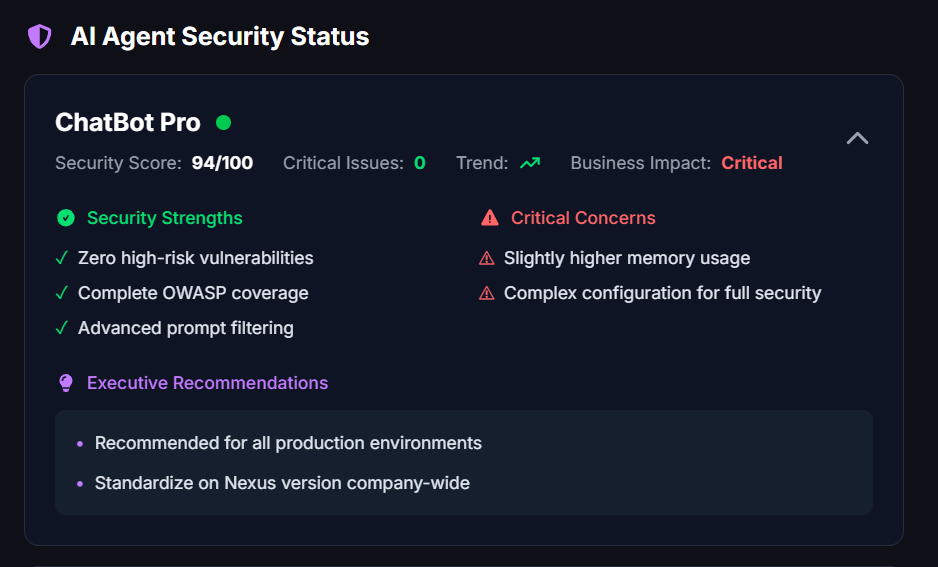

Executive Summaries

- High-level overviews for leadership with business impact analysis.

Security Dashboard

Interactive visualization of your agent's security posture

Privacy Ensured

Your data stays secure with our privacy-first approach

Privacy-First Approach

We prioritize your data security with multiple deployment options to meet your privacy requirements.

On-Premises Deployment

- Keep all data within your infrastructure with our self-hosted option.

Data Encryption

- All data is encrypted in transit and at rest for maximum security.

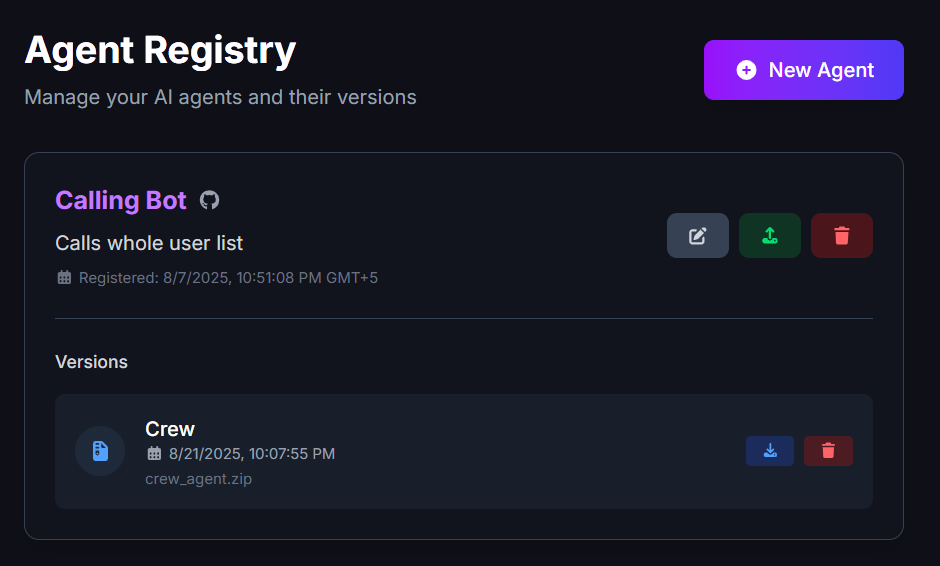

Enterprise Integration

Test your AI agents whether they're hosted on GitHub, in a private repo, or locally on your machine.

GitHub Integration

- Connect directly to your GitHub repos for seamless testing.

API Access

- Integrate with your CI/CD pipeline for automated security testing.

Seamless Integration

Works with your existing development workflow

Process

AI Security Evaluation in 3 Simple Steps

Get actionable security insights quickly and efficiently

Connect Your Agent

Link your AI agent via GitHub, API, or upload your code directly to our secure platform.

GitHub integration for seamless connection

API access for automated testing

Run Security Tests

Our platform automatically tests your agent against all OWASP Top 10 vulnerabilities and beyond.

Comprehensive OWASP Top 10 coverage

Additional advanced security checks

Review & Remediate

Get detailed reports with prioritized vulnerabilities and clear remediation steps.

Interactive dashboard with visualizations

Detailed JSON output for integration

Security Standard

Complete OWASP Top 10 for LLMs Coverage

Comprehensive testing for all critical security risks in Large Language Models

LLM01: Prompt Injection

Manipulating LLMs via crafted inputs to produce unintended behaviors, bypassing filters or extracting data.

LLM02: Sensitive Information Disclosure

Inadequate validation or sanitization of LLM outputs before processing by other components.

LLM03: Supply Chain

Compromising training data to manipulate model behavior or introduce vulnerabilities.

LLM04: Data and Model Poisoning

Causing resource exhaustion through specially crafted inputs that lead to service degradation.

LLM05: Improper Output Handling

Risks from compromised components, datasets, or plugins in the LLM ecosystem.

LLM06: Excessive Agency

LLMs revealing confidential data in responses, including training data memorization.

LLM07: System Prompt Leakage

LLM plugins accepting free-form text inputs that can exploit the plugin's insufficient input validation.

LLM08: Vector and Embedding Weaknesses

LLM-based systems with excessive functionality, permissions, or autonomy leading to unintended consequences.

LLM09: Misinformation

Systems or people overly trusting LLM outputs without supervision leading to potential errors.

LLM10: Unbounded Consumption

Unauthorized access, copying, or exfiltration of proprietary LLM models.

Integrations

Works With Your Existing Stack

Seamlessly integrate with your development workflow and infrastructure

GitHub Integration

Connect directly to your GitHub repositories for automated security testing in your existing workflow.

API Access

Integrate security testing into your CI/CD pipeline with our comprehensive REST API.

On-Premises Deployment

Keep all data within your infrastructure with our self-hosted deployment option.

Why It Matters

The Business Case for AI Security

Protecting your AI systems is no longer optional - it's essential

Security Breaches Are Costly

Prevent financial and reputational damage

Financial & Reputational Impact

Security breaches in AI systems can lead to significant financial losses and damage to your organization's reputation. A single vulnerability can compromise sensitive data and erode customer trust.

The average cost of a data breach is $4.45 million according to IBM's 2023 report

60% of small businesses close within 6 months of a cyber attack

Regulatory Compliance

With increasing regulations around AI systems (EU AI Act, NIST AI RMF, etc.), security testing is becoming a legal requirement, not just a best practice.

The EU AI Act imposes fines up to 7% of global turnover for non-compliance

NIST AI Risk Management Framework provides guidelines for secure AI development

Regulatory Compliance

Meet legal requirements for AI systems

Customer Trust

Build confidence in your AI solutions

Building Customer Trust

Security testing demonstrates your commitment to responsible AI development, building trust with customers and stakeholders.

83% of customers will stop using a service after a data breach

Companies with strong security practices see 23% higher customer retention

Ready to secure your AI agents?Start testing today.

Join enterprise AI teams who trust Aginiti for their security testing needs.

Experience the Platform